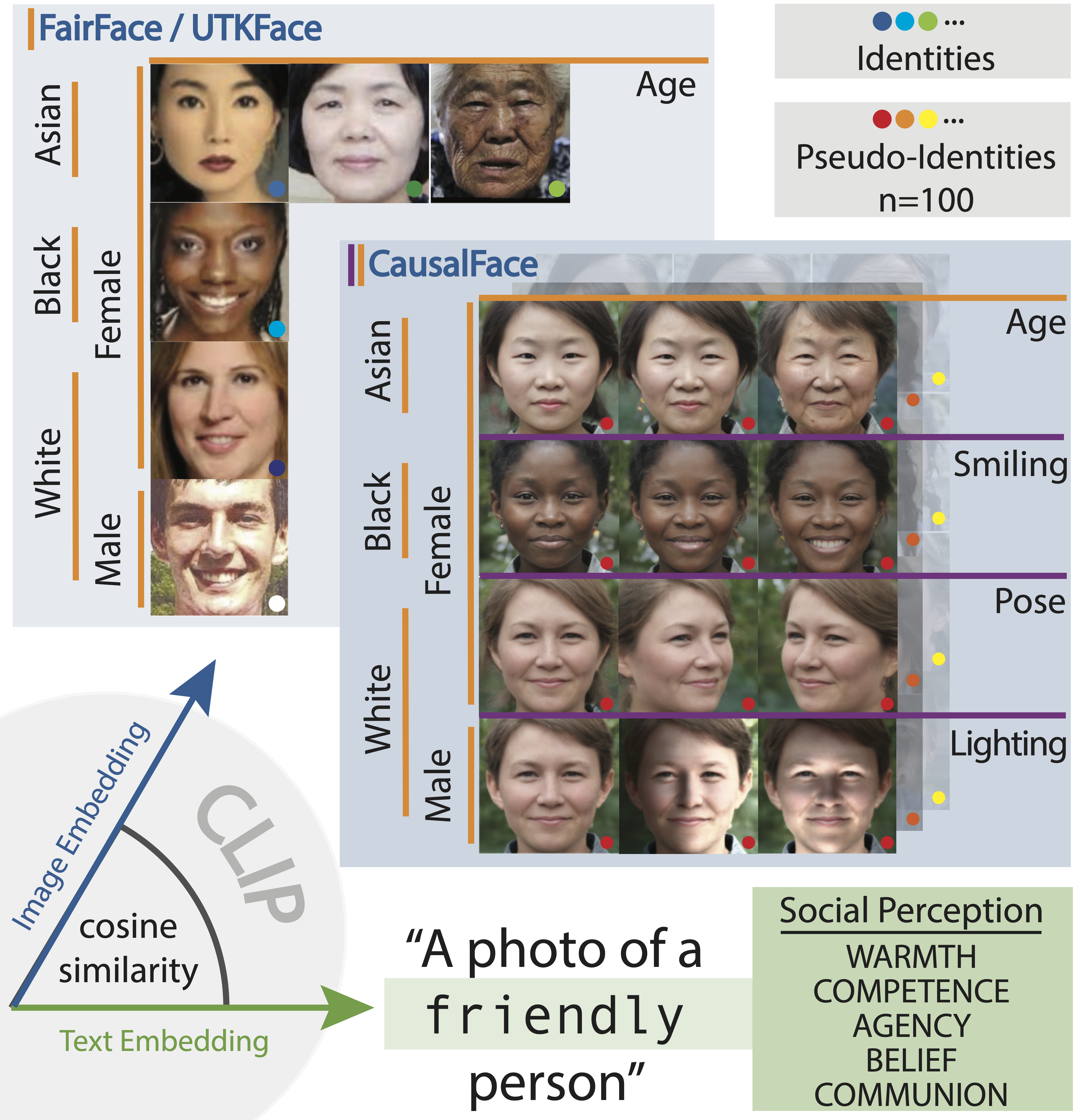

We explore social perception of human faces in CLIP, a widely used open-source vision-language model. To this end, we compare the similarity in CLIP embeddings between different textual prompts and a set of face images. Our textual prompts are constructed from well-validated social psychology terms denoting social perception. The face images are synthetic and are systematically and independently varied along six dimensions: the legally protected attributes of age, gender, and race, as well as facial expression, lighting, and pose. Independently and systematically manipulating face attributes allows us to study the effect of each on social perception and avoids confounds that can occur in wild-collected data due to uncontrolled systematic correlations between attributes.

Our findings are experimental rather than observational.

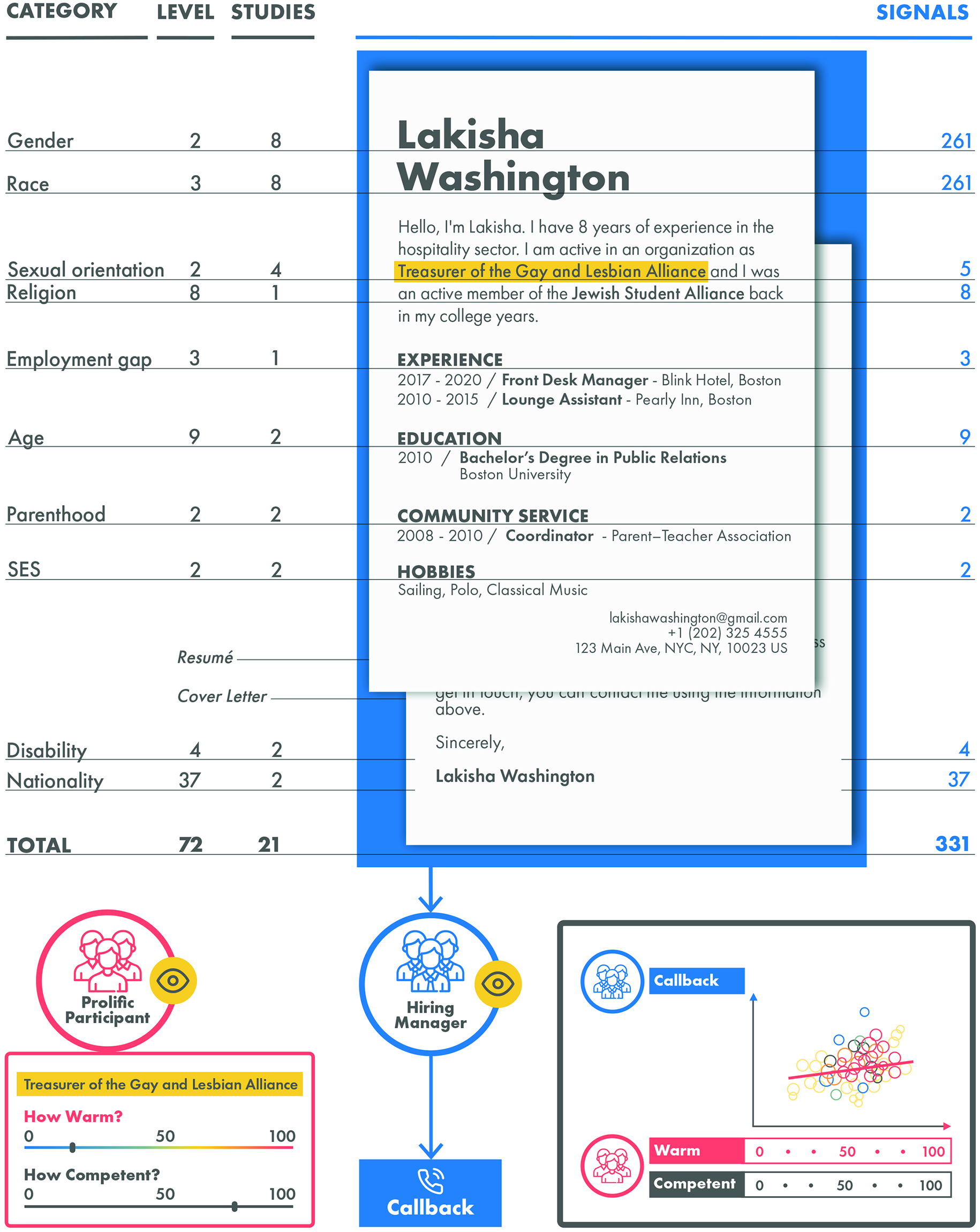

Our main findings are three. First, while CLIP is trained on the widest variety of images and texts, it is able to make fine-grained human-like social judgments on face images. Second, age, gender, and race do systematically impact CLIP’s social perception of faces, suggesting an undesirable bias in CLIP vis-a-vis legally protected attributes.

Most strikingly, we find a strong pattern of bias concerning the faces of Black women, where CLIP produces extreme values of social perception across different ages and facial expressions.

Third, facial expression impacts social perception more than age and lighting as much as age.

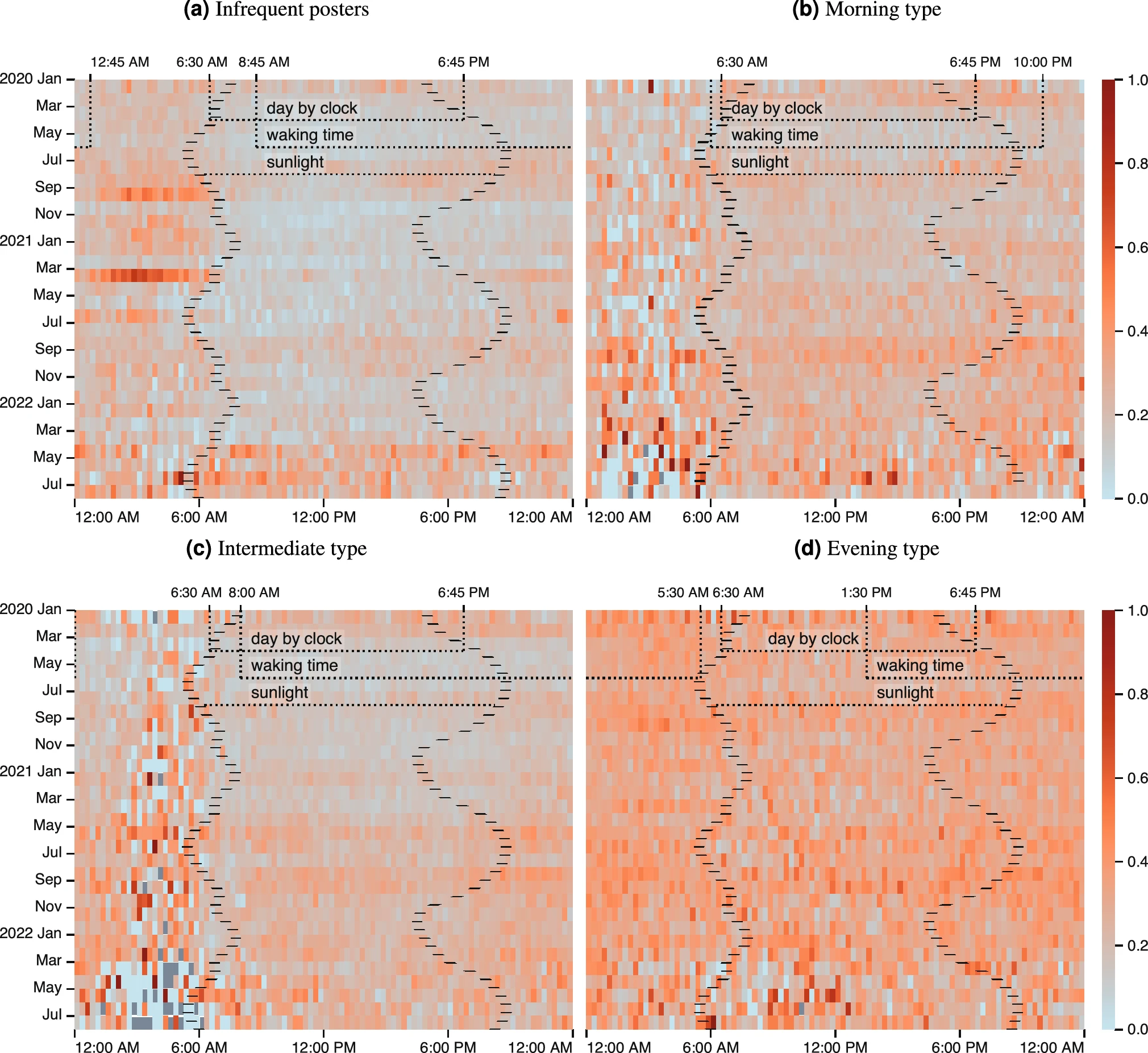

Protected attributes (orange) and non-protected ones (purple) cause comparable variation in the CLIP embedding. Variation is quantified using a bootstrapping method, calculating the absolute value of $\Delta$ cosine similarity differences between two images compared to each of the valenced text prompts used in our study. The two randomly selected images are distinct for exactly one attribute. For instance, to generate the box plot for ``smiling’’, two images of the same pseudo-identity with varying smiling values are compared. The gray box plot (seed) illustrates variation when pseudo-identities are changed while other attributes are maintained constant.

Boxes are arranged by median values (lines), and means are indicated by triangles.

Protected attributes (orange) and non-protected ones (purple) cause comparable variation in the CLIP embedding. Variation is quantified using a bootstrapping method, calculating the absolute value of $\Delta$ cosine similarity differences between two images compared to each of the valenced text prompts used in our study. The two randomly selected images are distinct for exactly one attribute. For instance, to generate the box plot for ``smiling’’, two images of the same pseudo-identity with varying smiling values are compared. The gray box plot (seed) illustrates variation when pseudo-identities are changed while other attributes are maintained constant.

Boxes are arranged by median values (lines), and means are indicated by triangles.

The last finding predicts that studies that do not control for unprotected visual attributes may reach the wrong conclusions on bias.

Our novel method of investigation, which is founded on the social psychology literature and on the experiments involving the manipulation of individual attributes, yields sharper and more reliable observations than previous observational methods and may be applied to study biases in any vision-language model.

Citation

Link to our paper: arXiv

Hausladen CI, Knott M, Camerer CF, Perona P. Social perception of faces in a vision-language model. arXiv preprint arXiv:2408.14435. 2024 Aug 26.